Compiler Optimizations Undo Source-Level Formal Verification Guarantees

A Back-of-the-Envelope Attacker Work Effort Analysis

Executive Summary:

A 2024 ETH Zurich study [1] shows that common compiler optimizations, intended to improve performance, can inadvertently introduce timing side-channel vulnerabilities into cryptographic libraries, even those formally verified at the source code level.

While the ETH Zurich study thoroughly details the prevalence and technical aspects of these vulnerabilities across architectures and compiler configurations, this brief note aims to quantify how easily they can be exploited, using the Cyber Independent Testing Lab's (CITL) 2016 Hardening Line Metric. Applying this metric to the vulnerabilities identified in the ETH Zurich research yields a back-of-the-envelope quantitative assessment of the effort an attacker needs to exploit them.

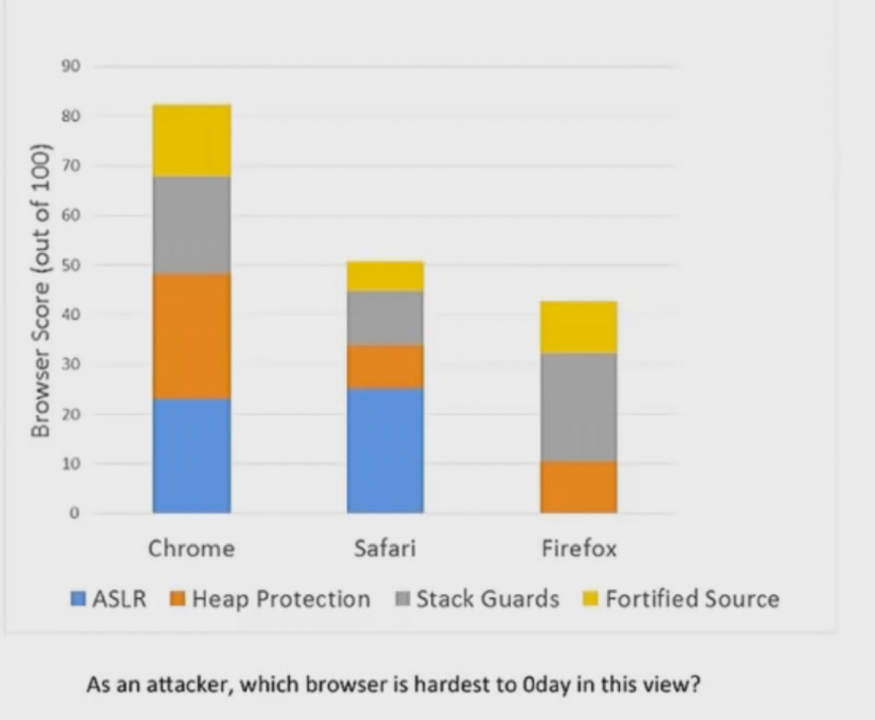

Browser hardening: makeup of CITL score factors

Technical Analysis:

1. Compiler Optimizations and Side-Channel Leakage:

Modern compilers, including GCC and LLVM, utilize a variety of optimization techniques aimed at enhancing code efficiency. However, these optimizations can unintentionally introduce secret-dependent variations in execution time or memory access patterns, resulting in timing side-channel leakage. The research has identified three primary categories of problematic optimizations that contribute to this issue.

First, bitmask arithmetic transformation occurs when compilers replace bitmask arithmetic (a technique typically used for constant-time operations) with conditional branches or memory accesses. This transformation can lead to timing variations that depend on the secret data involved in the bitmask calculation. Second, arithmetic shortcut insertion involves the compiler inserting branches to bypass seemingly redundant computations based on assumptions regarding the input data. In cryptographic contexts, even a single skipped instruction can inadvertently leak information about the secret key. Lastly, complex branch decomposition occurs when compilers break down intricate branching conditions into multiple simpler branches. Although this decomposition maintains logical equivalence, it can expose information about the original condition through the sequence in which branches are executed.
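To make the first category concrete, here is a minimal sketch of the two forms of a secret-dependent select. It only models the 32-bit arithmetic a C compiler would see; Python itself gives no timing guarantees, and the function names are hypothetical, not taken from any of the libraries discussed.

```python
MASK32 = 0xFFFFFFFF  # model C's uint32_t wrap-around arithmetic


def select_bitmask(bit: int, a: int, b: int) -> int:
    """Return a if bit == 1 else b, using only arithmetic on the secret bit."""
    mask = (-bit) & MASK32  # bit=1 -> 0xFFFFFFFF, bit=0 -> 0x00000000
    return (a & mask) | (b & ~mask & MASK32)


def select_branch(bit: int, a: int, b: int) -> int:
    """Logically equivalent form an optimizer may emit: a branch on the secret bit."""
    if bit:
        return a
    return b


if __name__ == "__main__":
    # Both forms always agree on the result; only the branching form has
    # control flow that depends on the secret bit.
    for bit in (0, 1):
        print(bit, hex(select_bitmask(bit, 0xDEADBEEF, 0x12345678)))
```

Because the two functions are observationally identical in their return values, a compiler that only preserves input/output behavior is free to turn the first into the second, which is exactly where the source-level guarantee is lost.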

2. Microarchitectural Features to Exploit:

The observed vulnerabilities exploit microarchitectural features that exhibit timing variations based on data values or execution flow, including branch prediction units, cache hierarchy, and instruction pipelines. Secret-dependent branches can influence the accuracy of branch prediction, leading to measurable timing differences. Similarly, secret-dependent memory accesses can affect cache hit rates, making them susceptible to cache side-channel attacks. Additionally, variations in instruction execution time due to data dependencies or pipeline stalls can leak information about the processed data. These vulnerabilities enable various side-channel attacks, such as cache timing attacks, where attackers monitor cache access patterns to infer secret data used in memory operations; branch prediction attacks, which exploit branch prediction behavior to deduce information about secret-dependent control flow; and timing analysis attacks, where attackers measure execution time variations to extract sensitive information, even if the leakage is subtle.
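A timing analysis attack of the kind described above is, at its core, a statistical comparison of execution times for different secret values. The sketch below uses simulated timings under an assumed noise model (base cost plus Gaussian noise, all numbers hypothetical) and Welch's t-test; the 4.5 threshold is a convention commonly used in leakage assessment, not a value from the study.

```python
import random
import statistics


def welch_t(xs, ys):
    """Welch's t-statistic for two samples with possibly unequal variances."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    vx, vy = statistics.variance(xs), statistics.variance(ys)
    return (mx - my) / ((vx / len(xs) + vy / len(ys)) ** 0.5)


def simulate_timings(n, base_cycles, extra_cycles, noise_sd, rng):
    """Hypothetical timing model: base cost + secret-dependent extra + noise."""
    return [rng.gauss(base_cycles + extra_cycles, noise_sd) for _ in range(n)]


rng = random.Random(2024)  # fixed seed so the simulation is repeatable

# Leaky code: secret bit = 1 costs 3 more simulated cycles than secret bit = 0.
leaky_bit0 = simulate_timings(5000, 100.0, 0.0, 10.0, rng)
leaky_bit1 = simulate_timings(5000, 100.0, 3.0, 10.0, rng)

# Constant-time code: both secret values cost the same on average.
ct_bit0 = simulate_timings(5000, 100.0, 0.0, 10.0, rng)
ct_bit1 = simulate_timings(5000, 100.0, 0.0, 10.0, rng)

t_leaky = welch_t(leaky_bit1, leaky_bit0)
t_ct = welch_t(ct_bit1, ct_bit0)

if __name__ == "__main__":
    print(f"leaky: |t| = {abs(t_leaky):.1f}, constant-time: |t| = {abs(t_ct):.1f}")
```

A large |t| for the leaky case means the two timing distributions are distinguishable, so the attacker learns the secret bit; for the constant-time case the statistic stays near zero.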

4. Quantitative Analysis:

The research conducted a large-scale analysis, encompassing:

- 8 Cryptographic Libraries: HACL*, Libsodium, Botan, BearSSL, BoringSSL, OpenSSL, WolfSSL, MbedTLS

- 6 Architectures: x86-64, x86-i386, armv7, aarch64, RISC-V, MIPS-32

- 17 Compiler Versions: GCC 5-13, LLVM 5-18

- 7 Optimization Levels: -O0, -O1, -O2, -O3, -Ofast, -Os, -Oz

The study found secret-dependent control flow in 8.2% of binaries and secret-dependent memory access in 6.6%. Notably, vulnerabilities were found even in libraries formally verified at the source code level and across all tested architectures, compiler versions, and optimization levels.
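For a sense of scale, the counts listed above imply an upper bound on the number of distinct build configurations. This is plain arithmetic on the stated counts, assuming every combination were built; it is not the number of binaries the study actually analyzed.

```python
import math

# Counts exactly as stated in the list above; nothing else is assumed.
DIMENSIONS = {
    "libraries": 8,
    "architectures": 6,
    "compiler_versions": 17,
    "optimization_levels": 7,
}


def max_configurations(dims):
    """Upper bound: one build per (library, arch, compiler, level) combination."""
    return math.prod(dims.values())


if __name__ == "__main__":
    print(max_configurations(DIMENSIONS))
```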

5. Unexpected Findings:

Several unexpected findings highlight the insidious nature of compiler-induced vulnerabilities:

- Ubiquitous Vulnerability: The study identified vulnerabilities across a broad spectrum of architectures, compiler versions, and optimization levels, indicating that this is not an isolated issue affecting niche systems or specific compiler configurations.

- Formal Verification Is Not a Panacea: Even libraries like HACL*, which have undergone rigorous formal verification at the source code level, were found to be susceptible to compiler-induced timing side-channel leaks.

- Optimization Levels Offer No Guarantee of Safety: Contrary to common belief, disabling optimizations (e.g., using -O0) does not guarantee the absence of timing side-channel vulnerabilities. The research discovered vulnerabilities even at the lowest optimization level.

6. Vulnerabilities, Attacker Effort (approx. CITL Hardening Metric):

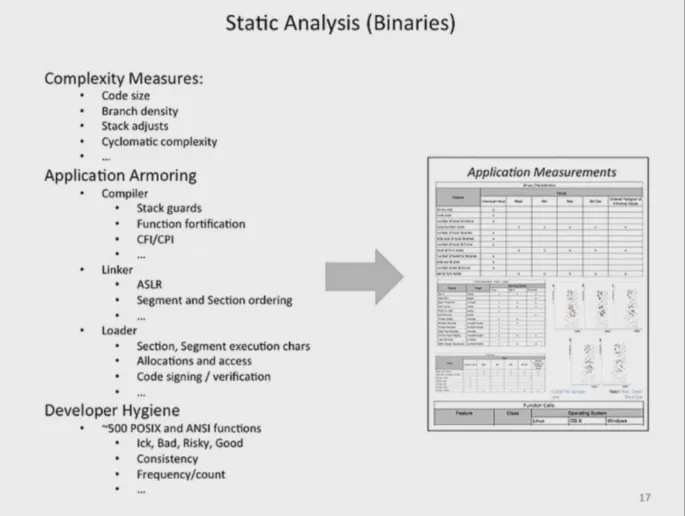

The identified vulnerabilities have varying impacts on attacker effort, which can be understood through the CITL Hardening Metric, developed between 2015 and 2018 by the founders of the Cyber Independent Testing Lab (CITL) [3]. This metric offers a structured approach to evaluating software security based on measurable attributes, quantifying how difficult it is for an attacker to successfully exploit vulnerabilities.

Various static analysis features and safety measures may be present in software binaries, such as Address Space Layout Randomization (ASLR), which randomizes memory addresses to complicate code location predictions for attackers; Data Execution Prevention (DEP), which prevents code execution from non-executable memory regions; Control Flow Integrity (CFI), which ensures that the program's execution flow cannot be hijacked; and stack guards, which protect against buffer overflow attacks by detecting stack manipulations.

The scoring system penalizes binaries for lacking critical security features, with each missing feature resulting in a deduction from the overall score. A higher score indicates higher attacker effort, suggesting that the software is more secure.
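The deduction mechanism can be sketched in a few lines. The base score and the per-feature penalties below are hypothetical placeholders chosen for illustration; they are not CITL's actual weights.

```python
# Hypothetical penalties per missing feature; NOT CITL's real weights.
PENALTIES = {"aslr": 20, "dep": 20, "cfi": 20, "stack_guard": 20}


def hardening_score(features_present, base=100, penalties=PENALTIES):
    """Start from a base score and deduct a penalty for each missing feature."""
    missing = [name for name in penalties if name not in features_present]
    return base - sum(penalties[name] for name in missing)


if __name__ == "__main__":
    print(hardening_score({"aslr", "dep", "cfi", "stack_guard"}))  # nothing missing
    print(hardening_score({"aslr", "dep"}))  # CFI and stack guards missing
```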

Back-of-the-Envelope (BoE) Estimation of Attacker Work Effort

Vulnerability #1 (HACL secp256): This vulnerability, caused by replacing bitmask arithmetic with conditional moves or branches, primarily weakens CFI and potentially ASLR. A skilled attacker with local access and specialized cache-timing tools could likely exploit this in a few days. Remote exploitation is more challenging but might be feasible for highly skilled attackers with weeks or more of effort. The estimated CITL score reduction is -20 for CFI and potentially another -20 for ASLR, depending on its implementation.

Vulnerability #2 (Botan AES-GCM GHASH): This vulnerability, caused by replacing XOR-with-carry with a conditional branch, directly bypasses CFI, leading to a CITL score reduction of -20. A skilled attacker with remote access and high-precision timing measurements could potentially extract the key with relatively low effort (perhaps a few hours of work). Less skilled attackers with local access might require days or more.

Vulnerability #3 (BoringSSL Complex Branching): This vulnerability, caused by splitting complex branching conditions, might only slightly weaken CFI, resulting in a minor CITL score reduction (perhaps -5 to -10). Exploitation would likely require a highly skilled attacker with local access, advanced statistical analysis tools, and significant time and resources (weeks or even months). Remote exploitation is improbable due to the weak signal and challenges of precise timing measurements over a network.

Vulnerability #4 (BoringSSL Generic Bignum Implementation): This vulnerability, caused by the compiler's failure to optimize away secret-dependent branches when optimizations are disabled (-O0), leads to a direct CFI bypass and a significant CITL score reduction of -20. This vulnerability significantly lowers the bar for attackers, making exploitation possible even for less skilled attackers with local access and moderate effort. Remote exploitation might also be feasible depending on the context.
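The four estimates above can be collected as (minimum, maximum) deduction ranges and ranked by worst case. The numbers are the rough estimates from this note; the dictionary layout and function name are only an illustrative choice.

```python
# (min_deduction, max_deduction), taken from the estimates in the text above.
ESTIMATES = {
    "HACL secp256": (20, 40),                # CFI -20, potentially ASLR -20
    "Botan AES-GCM GHASH": (20, 20),         # CFI -20
    "BoringSSL complex branching": (5, 10),  # minor CFI weakening
    "BoringSSL generic bignum (-O0)": (20, 20),  # CFI -20
}


def rank_by_worst_case(estimates):
    """Order vulnerabilities by their largest estimated score deduction."""
    return sorted(estimates, key=lambda name: estimates[name][1], reverse=True)


if __name__ == "__main__":
    for name in rank_by_worst_case(ESTIMATES):
        low, high = ESTIMATES[name]
        print(f"{name}: -{low} to -{high}")
```

Ranking by score deduction alone does not capture the attacker skill and access differences described above, so it should be read alongside the work-effort estimates rather than in place of them.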

Correlation with Attacker Work Effort: The Hardening Metric empirically correlates with the work effort required by attackers to exploit vulnerabilities [4].

| Scenario | Adversary Capabilities | Estimated Attacker Work Effort | CITL Impact |
|---|---|---|---|
| HACL secp256 (Conditional Move) | Skilled attacker with local access and specialized cache-timing tools | A few days | CFI (-20), potentially ASLR (-20) |
| | Highly skilled attacker with remote access and high-precision timing measurements | Weeks or more | CFI (-20), potentially ASLR (-20) |
| Botan AES-GCM GHASH (XOR-with-carry) | Skilled attacker with remote access and high-precision timing measurements | A few hours | CFI (-20) |
| | Less skilled attacker with local access and basic timing measurement tools | Days or more | CFI (-20) |
| BoringSSL Complex Branching (Point Addition) | Highly skilled attacker with local access, advanced statistical analysis tools, and significant resources | Weeks or even months | Minor CFI weakening (-5 to -10) |
| | Remote exploitation | Improbable (but not impossible) | Minor CFI weakening (-5 to -10) |
| BoringSSL Generic Bignum Implementation (-O0) | Less skilled attacker with local access | Moderate effort | CFI (-20) |
| | Remote exploitation | Potentially feasible depending on context | CFI (-20) |

References

[1] Moritz Schneider, et al. "Breaking Bad: How Compilers Break Constant-Time Implementations." arXiv preprint arXiv:2410.13489 (2024).

[2] Xavier Leroy. "Formal verification of a realistic compiler." Communications of the ACM 52.7 (2009): 107-115.

[3] Cyber Independent Testing Lab. "About Methodology." https://cyber-itl.org/about/methodology/

[4] Daniel Bilar. "Attack Work Effort: Transparent Accounting for Software in Modern Companies." Medium. (2016)

Appendix

Risk in cryptography is utterly dominated by key management -Daniel Kaminsky A"H"

Work Effort: Several specific factors influence the ease or difficulty of exploiting one vulnerability compared to another, with signal strength being a critical element. A strong signal reduces noise and minimizes the number of measurements required for successful exploitation. One factor affecting signal strength is the nature of the leaked information; for instance, a carry bit in a key schedule calculation represents a more direct leak than a branch decision in a point addition, making it easier to exploit. The frequency of operations plays a significant role, as more frequently executed operations amplify the signal; for example, scalar multiplication occurs more often than point addition, enhancing its exploitability. Some architectures are also inherently more "leaky" than others, providing stronger side-channel signals that facilitate exploitation.
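The relation between signal strength and measurement count can be made concrete with the standard two-sample normal approximation, n ≈ 2((z_α + z_β)·σ/δ)², where δ is the timing difference (signal) and σ the noise. The sketch below is a textbook estimate under that assumption; the cycle values in the usage lines are hypothetical.

```python
import math
from statistics import NormalDist


def samples_needed(signal, noise_sd, alpha=0.05, power=0.9):
    """Approximate measurements per class to detect a mean timing shift.

    signal   : difference in mean execution time between two secret values
    noise_sd : standard deviation of the measurement noise (same units)
    """
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)  # two-sided significance threshold
    z_beta = z.inv_cdf(power)           # desired detection power
    return math.ceil(2 * ((z_alpha + z_beta) * noise_sd / signal) ** 2)


if __name__ == "__main__":
    # Hypothetical numbers: same noise, signals of 1, 2 and 4 cycles.
    for signal in (1.0, 2.0, 4.0):
        print(signal, samples_needed(signal, noise_sd=10.0))
```

Halving the signal roughly quadruples the number of measurements, which is why a weak leak over a noisy network is so much harder to exploit than a strong local one.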

Verified Binaries: One potential mitigation for compiler-induced vulnerabilities is the adoption of formally verified compilers such as CompCert [2]. CompCert offers strong, machine-checked assurances that the compiled code behaves as the source program specifies; preserving constant-time properties additionally requires a compiler proven to do so, which research variants of CompCert have demonstrated. Despite these guarantees, CompCert has not achieved widespread industry adoption, primarily because it supports a narrower range of architectures than mainstream compilers like GCC and LLVM. RISC-V and MIPS were identified as particularly vulnerable in the study, and CompCert does not target MIPS (RISC-V support was added in later CompCert releases). CompCert also tends to generate less optimized code than its counterparts.

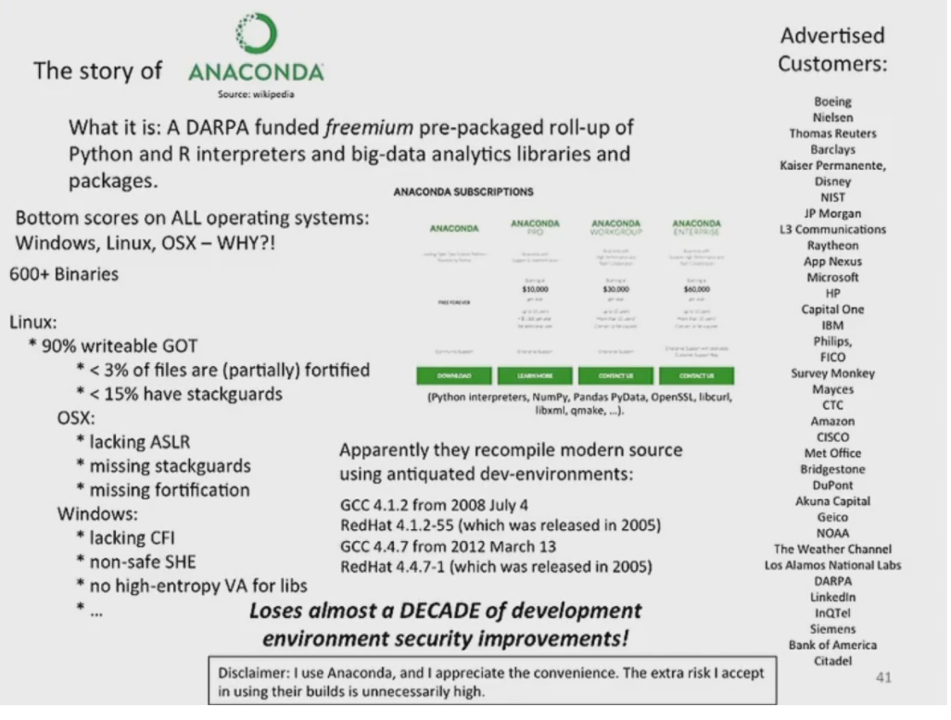

postscriptum

Anaconda is a DARPA-funded freemium, pre-packaged distribution of the Python and R programming languages, bundled with popular data science and big data analytics libraries. A 2016 analysis by the Cyber Independent Testing Lab (CITL) revealed alarming security shortcomings in Anaconda's binaries across Linux, OSX, and Windows platforms. The findings highlighted missing or inadequate implementations of crucial security features such as Address Space Layout Randomization (ASLR), stack guards, fortification, and Control Flow Integrity (CFI). Notably, Anaconda was found to be using outdated development environments, relying on compiler versions released almost a decade prior, which contributed to these vulnerabilities. Prominent users of Anaconda include sensitive U.S. government agencies such as DARPA and Los Alamos National Labs, as well as major corporations like Boeing, Microsoft, IBM, and JP Morgan. Other notable companies include Disney, Capital One, and Intel.

≡ 🪐(🔐🔤)∪(💭📍)∖(📚🔍)⟨CC BY-SA⟩⇔⟨🔄⨹🔗⟩⊇⟨👥⨹🎁⟩⊂⟨📝⨹🔍⟩⊇⟨🔄⨹🔗⟩⊂⟨📚⨹🔬⟩⊇⟨🔄⨹🔗⟩🪐

(📜🔥 Created by HKBH w dyb as grateful vessel, in mercy 📜🔥)