God willing

2024 GAMESEC The Price of Pessimism for Automated Defense paper

2012 Adversarial Dynamics Conficker Case paper

ORNL 2013 talk slides

CyCon 2011 Using a novel behavioral stimuli-response framework to Defend against Adversarial Cyberspace Participants paper

Keywords: Computer security, Game theory, Reinforcement learning, Automated threat response, Attacker modeling, Adaptive defense systems

Interplay between attacker knowledge and defender strategy: From 2012 real-world to 2024 formal quantitative framework

TL;DR: In cybersecurity, training AI defense agents to assume the worst-case attacker knowledge (pessimistic approach) can lead to suboptimal performance compared to assuming more realistic, limited attacker knowledge (optimistic approach). This finding resonates with observations from a 2012 study on the Conficker worm.

In essence, the 2012 Conficker study provides a real-world case study that supports the 2024 paper's findings about the "price of pessimism". The CWG's experience shows that overestimating the attacker's knowledge and capabilities can lead to inefficient and less effective defense strategies. The 2024 paper formalizes this intuition within a simulated environment and demonstrates its validity using reinforcement learning techniques, offering a more general and quantitative perspective on the importance of accurate attacker modeling in automated cyber defense.

2024 The Price of Pessimism for Automated Defense

Application: The 2024 research explores how different assumptions about attacker knowledge, when building AI agents for automated cyber defense, affect the agent's effectiveness. It aims to improve the design and training of such agents for better real-world cybersecurity outcomes.

Unexpected Findings:

- Optimism Wins: Contrary to the common cybersecurity practice of preparing for the worst, AI defense agents trained with an optimistic attacker model perform significantly better on average than those trained pessimistically. In simulated evaluations, the optimistic defender achieved an average reward of 790 against a zero-knowledge attacker, compared to the pessimistic defender's -124.

This contrasts with the common "prepare for the worst" mentality often seen in cybersecurity, as illustrated by the initial responses to the Conficker worm. The early attempts to contain Conficker by blocking access to the GeoIP database, while seemingly prudent, ultimately proved less effective than later, more adaptive strategies.

- Learned Defense is General: Defenders trained against learning attackers proved remarkably effective against a purely algorithmic attacker (NSARed) they had never encountered during training, with average rewards exceeding 990 and 959 for optimistic and pessimistic defenders, respectively.

This finding is important because it suggests that training against adaptive, intelligent adversaries creates more robust defenders. They don't just learn to counter specific tactics but develop a more general ability to detect and respond to a broader range of threats, even those not explicitly encountered during training. This supports the central argument in Saltaformaggio and Bilar 2011 that effective cyber defense requires a deep understanding of attacker behavior and an adaptive response strategy.

- Pessimism Leads to Local Optima: The pessimistic training approach makes the defender agent overly reactive. It focuses on expensive "restore node" actions, which hampers its long-term ability to adapt and learn better strategies. Pessimistic agents chose "restore node" for 41.85% of their actions, compared to 33.4% for optimistic agents.

The overuse of expensive "restore node" actions (i.e., completely cleaning and recovering a compromised machine) mirrors some of the early responses to Conficker, where significant resources were poured into cleaning infected systems. This reactive approach can be costly and may prevent the defender from developing more sophisticated, long-term strategies.
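Usage shares such as the 41.85% and 33.4% figures above come from counting how often each action appears in the defender's episode logs. The following is a minimal sketch of that bookkeeping, not the paper's code. The log contents and the action names other than "restore node" are hypothetical.

```python
from collections import Counter


def action_usage(actions):
    """Return each action's share of all actions taken, as a percentage."""
    counts = Counter(actions)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {name: 100.0 * n / total for name, n in counts.items()}


# Hypothetical episode log; "scan" and "isolate" are illustrative names only.
log = [
    "restore_node", "scan", "restore_node", "isolate", "scan",
    "restore_node", "scan", "isolate", "restore_node", "scan",
]
usage = action_usage(log)
print(usage["restore_node"])  # 40.0
```

Comparing these shares between two trained defenders is what exposes a bias toward one expensive action.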

Rationale for Unexpected Findings:

- Overestimation Penalty: Assuming the attacker has full knowledge leads the defender to overestimate the threat, causing them to overreact and waste resources on unnecessary actions, hindering their learning of more subtle and effective strategies.

- Generalization Power: Training against learning attackers forces the defender to adapt to a wider range of strategies, making them robust even against unseen algorithmic attacks.

- Exploration vs. Exploitation: Pessimism encourages exploitation of immediate threats (restore node) at the expense of exploring better long-term strategies, trapping the defender in local optima.
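One simple way to put a number on the overestimation penalty is the gap between the optimistic and pessimistic defenders' average rewards. The sketch below uses only the averages quoted in this summary. Treating the raw difference as "the price" is an assumption made for illustration, not necessarily the paper's formal definition.

```python
# Average rewards quoted in this summary: (optimistic, pessimistic) defender.
reported = {
    "zero-knowledge attacker": (790, -124),
    "full-knowledge attacker": (766, -651),
}


def reward_gap(optimistic, pessimistic):
    """Difference in average reward between the two defenders."""
    return optimistic - pessimistic


gaps = {name: reward_gap(*pair) for name, pair in reported.items()}
for name, gap in gaps.items():
    print(f"{name}: gap = {gap}")
# zero-knowledge attacker: gap = 914
# full-knowledge attacker: gap = 1417
```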

Approach:

- Model Definition: Define a Stochastic Bayesian Game (SBG) model representing attacker-defender interaction on a computer network, incorporating noise (false positives) and varying attacker knowledge assumptions.

- Prior Establishment: Train attacker agents in optimistic and pessimistic settings to obtain prior probability distributions over their actions.

- Agent Training: Train defender agents against optimistic and pessimistic learning attackers using Proximal Policy Optimization (PPO) within the modified YAWNING-TITAN (YT) environment.

- Evaluation: Evaluate trained defenders against both learning and algorithmic (NSARed) attackers across various scenarios, measuring rewards and analyzing action distributions.

- Bayes/Hurwicz Integration: Incorporate the Restricted Bayes/Hurwicz criterion into the attacker's decision-making process, evaluating its impact on attacker and defender performance.
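To make the last step concrete, here is a minimal sketch of a decision rule that blends the prior-expected payoff with the worst-case payoff through a pessimism coefficient. The blend formula is an assumed, commonly used form of such a criterion, so the paper's exact formulation may differ. The function names, payoff table, and prior are hypothetical.

```python
def bayes_hurwicz_score(payoffs, prior, pessimism):
    """Blend the prior-expected payoff with the worst-case payoff.

    payoffs:   payoff of one action under each possible hidden state
    prior:     probability of each hidden state (same order as payoffs)
    pessimism: 0.0 trusts the prior fully, 1.0 looks only at the worst case
    """
    if not 0.0 <= pessimism <= 1.0:
        raise ValueError("pessimism must lie in [0, 1]")
    expected = sum(p * u for p, u in zip(prior, payoffs))
    worst = min(payoffs)
    return (1.0 - pessimism) * expected + pessimism * worst


def choose_action(payoff_table, prior, pessimism):
    """Return the action with the highest blended score."""
    return max(
        payoff_table,
        key=lambda a: bayes_hurwicz_score(payoff_table[a], prior, pessimism),
    )


# Hypothetical attacker payoffs under two hidden states of the network.
payoff_table = {"aggressive": [10.0, -8.0], "cautious": [3.0, 1.0]}
prior = [0.8, 0.2]

print(choose_action(payoff_table, prior, 0.0))  # aggressive
print(choose_action(payoff_table, prior, 1.0))  # cautious
```

With a coefficient of 0 the agent follows its prior alone, and with 1 it guards only against the worst case. Intermediate values trade the two off.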

Results and Evaluation:

- Optimistic Defender Superiority: The optimistic defender consistently achieves significantly higher average rewards (790 vs -124 against zero-knowledge attackers, 766 vs -651 against full-knowledge attackers) and lower variance in rewards across evaluation runs (see interquartile mean rewards in Figure 4).

- Effectiveness against NSARed: Both optimistic and pessimistic defenders perform well against the NSARed agent (>900 average rewards), showcasing the generalization ability of learned defense strategies.

- Pessimistic Action Bias: The pessimistic defender overuses the "restore node" action (41.85%), incurring higher costs and limiting adaptability compared to the optimistic defender's more balanced action usage (Table 3).

- Bayes/Hurwicz Impact: Using the Bayes/Hurwicz criterion generally harms the zero-knowledge attacker's performance slightly against optimistic defenders and benefits the full-knowledge attacker against the same defenders. Interestingly, against pessimistic defenders, the pure zero-knowledge attacker achieves the highest interquartile mean reward, 23144, far exceeding both the Bayes/Hurwicz and full-knowledge attackers.
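Several of the results above are reported as interquartile mean rewards, that is, the mean of the middle half of the evaluation runs, which damps the effect of a few extreme episodes. The sketch below uses a simple truncating variant that drops the lowest and highest quarter of runs, rounding the cut down. The exact procedure used in the paper is not stated here, and the reward values are hypothetical.

```python
def interquartile_mean(values):
    """Mean of the middle half of the data (simple truncating variant)."""
    ordered = sorted(values)
    cut = len(ordered) // 4  # number of runs dropped from each end
    middle = ordered[cut:len(ordered) - cut]
    if not middle:
        raise ValueError("need at least one value")
    return sum(middle) / len(middle)


# Hypothetical per-run rewards with one very bad and one very good run.
rewards = [-900, 700, 750, 780, 800, 820, 850, 2000]
print(interquartile_mean(rewards))  # 787.5
```

For run counts not divisible by four, a stricter definition weights the boundary values fractionally. The truncating version is kept here for brevity.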

Practical Deployment and Usability:

- Rethink Worst-Case Assumptions: The results challenge the common "prepare for the worst" approach, suggesting a more nuanced, probability-based approach can be more effective.

- Focus on Learning and Adaptability: Train defense agents against diverse and adaptive attackers to foster robustness against both known and unknown attack strategies.

- Cost-Benefit Analysis: Evaluate action costs carefully and avoid over-relying on expensive actions like "restore node", which can hinder long-term learning.

Limitations, Assumptions, and Caveats:

- Simplified Environment: The modified YT environment, though more detailed than some previous work, still simplifies real-world networks and attacker behavior.

- Limited Action Space: The defender's action space is relatively limited, and more complex actions might be needed for real-world scenarios.

- Training Instability: Training reinforcement learning agents in partially observable environments can be unstable, and the chosen training parameters and duration might not represent optimal configurations.

Conflict of Interest: The research is funded by the Auerbach Berger Chair in Cybersecurity, which might introduce a bias towards certain research outcomes or defensive strategies.

Connections to the 2012 Conficker Study

The 2024 pessimism paper and the 2012 Conficker study are linked by the core concept of attacker knowledge and its impact on defender strategies. By formalizing attacker knowledge levels and quantifying their impact on defender performance, the 2024 research provides a theoretical framework for understanding the dynamics observed in the 2012 Conficker case. While not explicitly framing it in terms of "attacker knowledge," the 2012 analysis highlighted several aspects directly relevant to this concept:

- Conficker's Adaptive Behavior: The 2012 study meticulously documented Conficker's evolving tactics, including its propagation methods, update mechanisms, and armoring techniques. These adaptations suggest a degree of attacker awareness of the defender's actions and available defenses, implicitly acknowledging the role of attacker knowledge in shaping offensive strategies. The 2024 research formalizes this notion by explicitly modeling different levels of attacker knowledge.

- Defender's Reliance on Information and Coordination: The 2012 study emphasized the crucial role of information sharing and coordinated action within the Conficker Working Group (CWG) in mitigating the worm's spread. This highlights the importance of the defender's understanding of the attacker's capabilities and knowledge, a central theme in the 2024 research. The success of blocking update domains, though reliant on external forces outside of pure defense, reinforces this idea.

- Myopic Moves and Missed Opportunities: Both the Conficker developers and the defenders made "myopic" moves – decisions that seemed reasonable in the short term but had unintended long-term consequences. Conficker's reliance on the external GeoIP service, for example, provided an attack vector for defenders. This aligns with the 2024 findings, where the pessimistic defender's overreaction to perceived threats led to suboptimal long-term outcomes. Both studies emphasize the need for strategic thinking and anticipating the adversary's potential responses.

Closing Note:

The optimistic defender's success in the 2024 simulations can be interpreted as a validation of the more nuanced and adaptive approach implicitly adopted by the CWG in the real-world Conficker scenario. This suggests that moving beyond worst-case assumptions and focusing on likely attacker knowledge can lead to more effective and robust cyber defenses, specifically developing adaptive defensive strategies that balance short-term remediation with long-term learning.

Key Terms:

- Automated Defense: Using AI agents to automatically detect and respond to cyberattacks.

- Security Orchestration, Automation, and Response (SOAR): Rule-based systems that automate specific security tasks, but lack adaptive learning.

- Stochastic Bayesian Game (SBG): A game-theoretic model representing the interaction between an attacker and defender in a cybersecurity scenario with uncertainty and hidden information.

- Zero-Knowledge Attacker: An attacker with limited knowledge of the target system's vulnerabilities and defenses.

- Full-Knowledge Attacker: An attacker with complete knowledge of the target system.

- Price of Pessimism: The cost (in terms of performance) of assuming a worst-case attacker model.

- Restricted Bayes/Hurwicz Criterion: A decision-making framework for handling uncertainty, balancing prior beliefs with worst-case scenarios using a pessimism coefficient.

- Proximal Policy Optimization (PPO): A reinforcement learning algorithm used to train the AI agents in this research.

- YAWNING-TITAN (YT): A cybersecurity reinforcement learning environment used as a basis for the simulations in this study.

- NSARed Agent: A programmatic, non-learning attacker agent used as a benchmark.

- Restore Node: A defender action that returns a compromised computer (node) on the network to its original, uncompromised state (e.g., removing malware, resetting configurations, restoring data).

- Adversarial Machine Learning: A field of research that studies the vulnerabilities of machine learning models to attacks and develops defenses against them. This is relevant because the 2024 paper explores this concept in the context of cybersecurity.

- Cybersecurity Modeling: The process of creating mathematical or computational models to represent cybersecurity systems and scenarios, allowing researchers to analyze vulnerabilities, simulate attacks, and evaluate defense strategies.

- Partially Observable Environment: A situation where the agent (defender) has incomplete information about the state of the environment (network). This is a key characteristic of the SBG model used in the 2024 paper, reflecting the real-world challenges of cybersecurity, where attackers often try to hide their actions.

- State Space: The set of all possible states (configurations) a system (network) can be in. Understanding the state space is crucial for modeling and analyzing complex systems.

- Action Space: The set of all possible actions an agent (attacker or defender) can take.

- Reward Function: A mathematical function that defines the reward an agent receives for taking specific actions in specific states. Reinforcement learning algorithms use reward functions to guide the agent's learning process.

- Policy: A strategy that defines what action an agent should take in each possible state. Reinforcement learning aims to find an optimal policy that maximizes the agent's cumulative reward.

- Convergence: In reinforcement learning, convergence refers to the process where the learning algorithm settles on a stable policy that consistently achieves good performance.

- Local Optimum: A situation where a learning algorithm gets stuck at a suboptimal policy, even though better policies might exist. The 2024 paper suggests that pessimistic training can lead to local optima.

- Exploration vs. Exploitation: A fundamental trade-off in reinforcement learning, where the agent must balance exploring new actions and strategies with exploiting actions that have previously yielded good results.
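The exploration vs. exploitation trade-off is often illustrated with an epsilon-greedy rule: with probability epsilon the agent tries a random action, otherwise it picks the action it currently rates highest. This is a textbook sketch only. PPO, the algorithm used in the 2024 paper, explores through a stochastic policy rather than epsilon-greedy selection, and the value estimates below are hypothetical.

```python
import random


def epsilon_greedy(q_values, epsilon, rng):
    """Pick an action index: explore with probability epsilon, else exploit."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))  # explore: uniform random action
    # exploit: index of the highest current value estimate
    return max(range(len(q_values)), key=lambda i: q_values[i])


q_values = [1.0, 5.0, 2.0]  # hypothetical value estimates for three actions
rng = random.Random(0)

greedy_choice = epsilon_greedy(q_values, 0.0, rng)  # never explores
explored = {epsilon_greedy(q_values, 1.0, rng) for _ in range(200)}  # always explores
print(greedy_choice)  # 1
```

An agent that never explores keeps choosing whatever looks best under its current estimates, which is how a policy can stay stuck in a local optimum.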

≡ 📜🔥 (🧠 ∪ 📚) ∩ (✡️ ∪ 🕎) ∖ (❓ ∪ 🚫) ⟨הלכה⟩ ⇔ ⟨🔄 ⨹ 🗣️⟩ ⊇ ⟨👴 ⨹ ❤️⟩ ⊂ ⟨📝 ⨹ ⚖️⟩ ⊇ ⟨🔄 ⨹ 📚⟩ ⊂ ⟨🕍 ⨹ 🌍⟩ ⊇ ⟨🔄 ⨹ 🙏⟩ 📜🔥

(📜🔥 Created by HKBH with dyb as a grateful vessel, in mercy 📜🔥)

postscriptum:

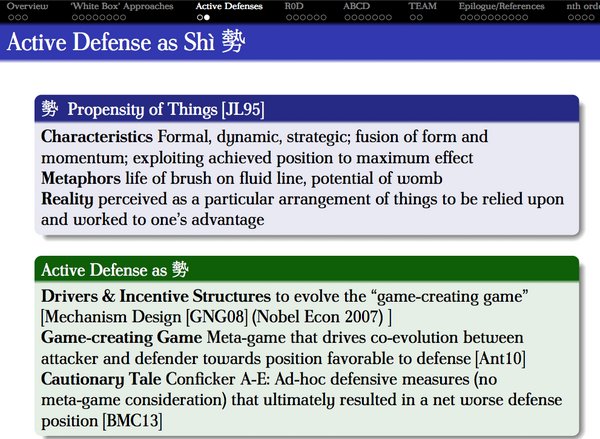

dyb talk .sg MINDEF (2013) Slide "Active Defense as Shi 勢"
